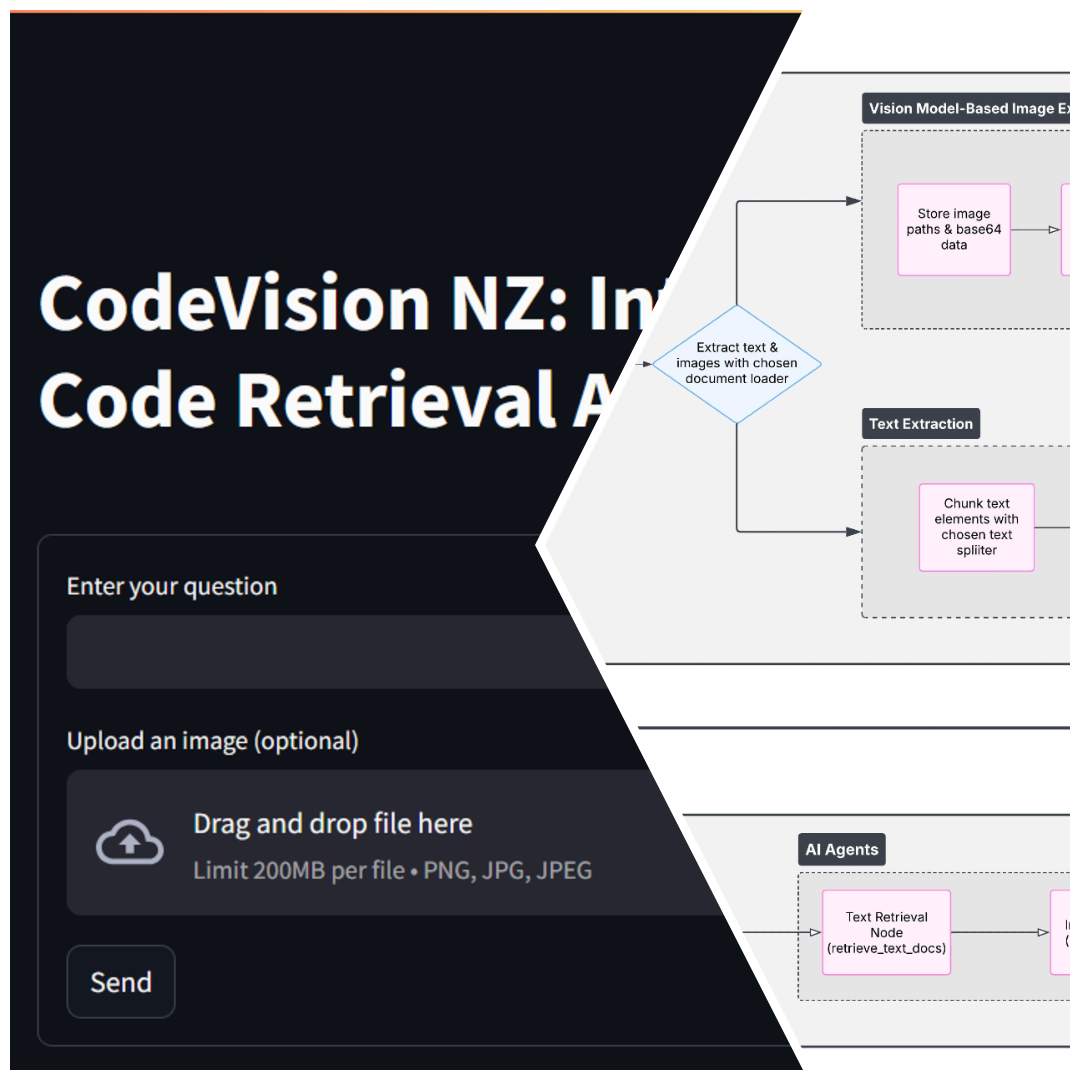

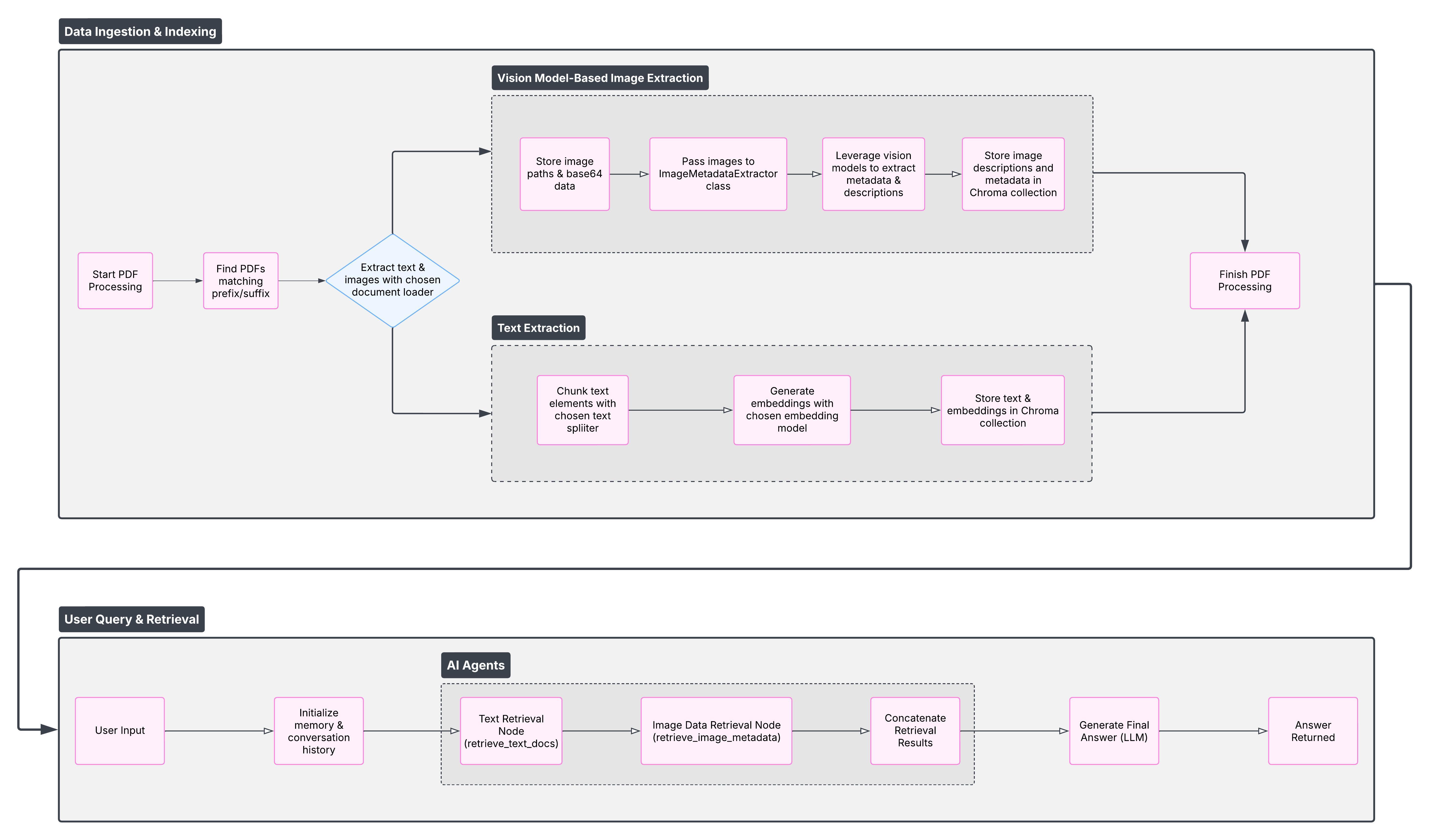

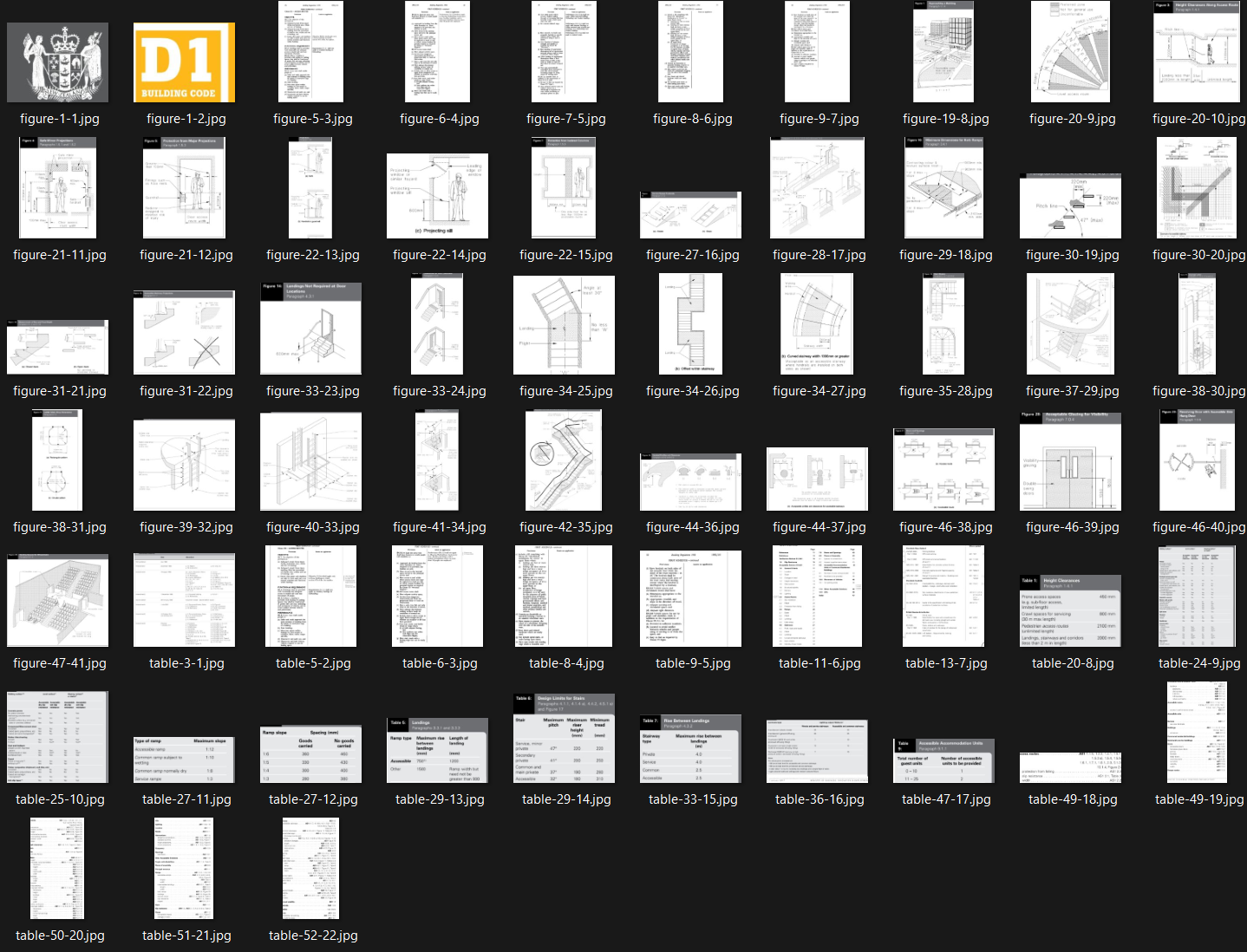

CodeVision NZ is a fully local, multimodal Retrieval-Augmented Generation (RAG) application that makes the New Zealand Building Code (NZBC) searchable through a chat interface. It processes official NZBC PDFs with the `unstructured` library to extract structured text and high-resolution architectural diagrams, stores the results in a ChromaDB vector store, and answers questions with local LLMs served by Ollama. Users query the building code through a Streamlit interface and receive grounded answers drawn from both clauses and figures, with the supporting context and citations included. All processing happens on the local machine, so documents and queries stay private and retrieval does not depend on a cloud service.
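The retrieve-then-cite flow described above can be illustrated with a minimal, standard-library-only sketch. It is not the project's implementation: a toy bag-of-words similarity stands in for ChromaDB's embedding search, no Ollama model is called, and the `Chunk` class, the function names, and the sample chunks are all hypothetical. The sample text is invented for illustration and is not quoted from the NZBC.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    """One retrievable unit: a clause's text or a figure's caption."""
    source: str   # hypothetical citation label shown to the user
    kind: str     # "text" or "figure"
    content: str


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[Chunk], k: int = 2) -> list[Chunk]:
    """Return the k chunks most similar to the query, text and figures alike."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c.content)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, hits: list[Chunk]) -> str:
    """Assemble a grounded prompt in which every context line carries its source."""
    context = "\n".join(f"[{c.source}] ({c.kind}) {c.content}" for c in hits)
    return (
        "Answer using only the context below and cite the bracketed sources.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Invented sample data for illustration only; not NZBC text.
CHUNKS = [
    Chunk("Sample Clause A", "text", "Stairs need a handrail along their full length."),
    Chunk("Sample Figure 1", "figure",
          "Diagram showing handrail height measured from the stair pitch line."),
    Chunk("Sample Clause B", "text", "Wet areas need impervious floor surfaces."),
]

if __name__ == "__main__":
    question = "What handrail height applies to stairs?"
    print(build_prompt(question, retrieve(question, CHUNKS)))
```

In a full pipeline, the string returned by `build_prompt` would be sent to the local model, and the bracketed source labels are what allow the answer to cite specific clauses and figures.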

Key Objectives

- Multimodal Retrieval – Extract both text and images using vision and language models.

- Precise, Cited Answers – Return accurate responses with references to relevant clauses and diagrams.

- Natural Language Querying – Ask questions in plain English and get clear, contextual answers.

- Local & Secure Deployment – Run entirely offline using open-source tools to ensure full privacy.

- Faster Compliance Checks – Accelerate code research for architects, engineers, and consultants.